- initialize weights \(\alpha_{i} = \frac{1}{N}\)

- for \(t \in 1 … T\)

- learn this-round classifier \(f_{t}\qty(x)\) on data weights \(\alpha_{i}\)

- recompute classifier coefficient \(\hat{w}_{t}\)

- recompute weights \(\alpha_{i}\)

- normalize weights \(\alpha_{i} = \frac{\alpha_{i}}{\sum_{j=1}^{N} \alpha_{j}}\)

- final model predicts via \(\hat{y} = \text{sign}\qty(\sum_{t=1}^{T} \hat{w}_{t}f_{t}\qty(x))\) for classifier with \(T\) rounds

After some iterations. This algorithm only works on binary classification and eventually gets exponential loss / 0/1 loss. gradient boosting will eventually allow a more general form.

convergence theorem

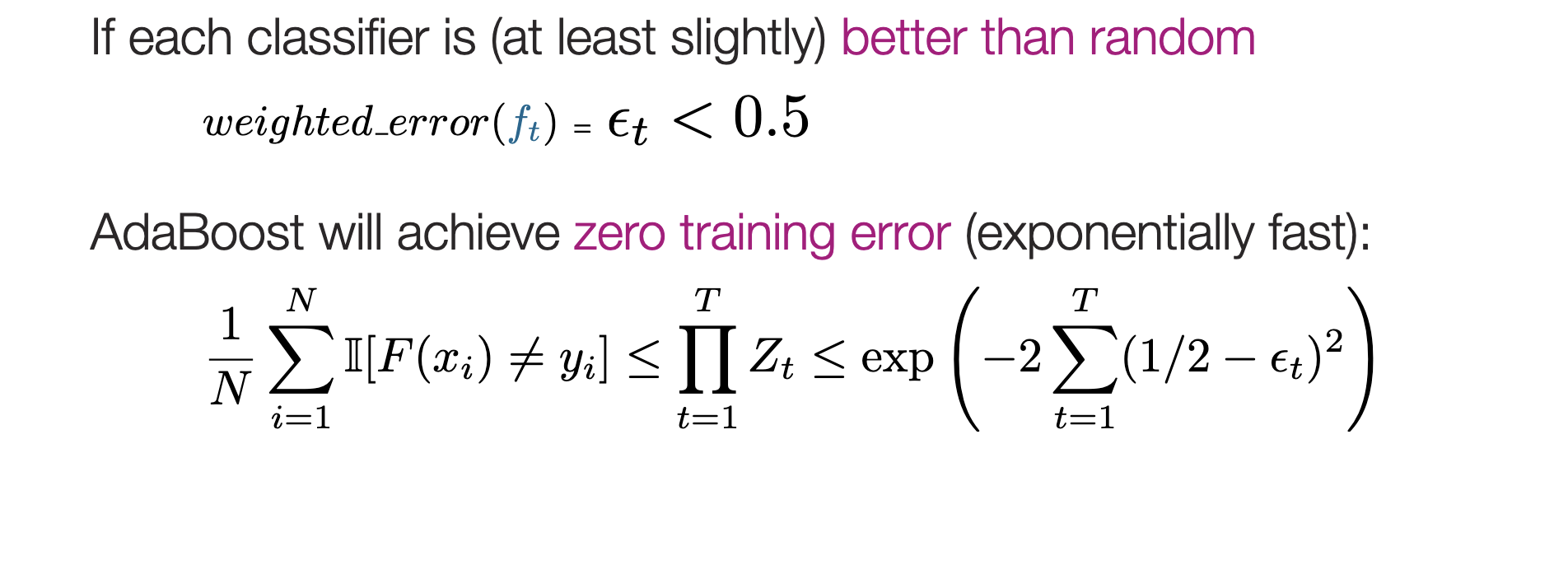

At every \(t\), if we can find a weak learner with weighted error < 0.5 for all rounds, then the AdaBoost converges.

The training error of the classifier after training via AdaBoost (left term) is bounded by:

\begin{equation} \frac{1}{N} \sum_{i=1}^{N} 1 \qty {F\qty(x_{i}) \neq y_{i}} \leq \frac{1}{N} \sum_{i=1}^{N} \exp \qty(-y_{i}\text{score}\qty(x_{i})) = \prod_{t=1}^{T} \sum_{i=1}^{N} \alpha_{i,t} \exp \qty(-\hat{w}_{t} y_{i} f_{t}\qty(x_{i})) \end{equation}

where score is the resembling expression: \(\text{score}\qty(x) = \sum_{t}^{} \hat{w}_{t}f_{t}\qty(x) ; F\qty(x) = \text{sign}\qty(score\qty(x))\)

Sketch:

Notice that \(y_{i}score\qty(x_{i})\) is a proxy for whether or not \(F\qty(x_{i}) \neq y_{i}\) (the expression is only negative during disagreement)

And so \(e^{-y_{i}\text{score}\qty(x_{i})}\) is an exponential loss for the binary classification taskthat goes exponentially high when the scores are really not agreeing in sight with \(y_{i}\). This gives a upper bound on the zero-one loss on the left, allowing us to:

in order for us to have a tight error bound, we should therefore minimize the rightmost term as a function of \(\hat{w}_{t}\) and \(a_{i,t}\). Turns out, if you do that, we get that the optimal \(w_{i} = \frac{1}{2} \ln \qty(\frac{1-\varepsilon_{t}}{\varepsilon_{t}})\).

classifier weighting

If \(f_{t}\qty(x)\) is good, then set \(\hat{w}_{t}\) to be large; otherwise, set \(\hat{w}_{t}\) to be small. We can write:

\begin{equation} \hat{w}_{t} = \frac{1}{2} \ln \qty( \frac{1- \text{weighted\_error}\qty(f_{t})}{\text{weighted\_error}\qty(f_{t})} ) \end{equation}

This expression has the following properties: a great classifier is upweighted, a random classifier has weight that tends to zero, and a terrible classifier is automatically inverted. Notice that also if we have \(0\) weighted error, \(\hat{w}_{t} \to \infty\), which is nice.

| Classifier Quality | Weight |

|---|---|

| good | positive high |

| random | zero |

| bad (inversely good) | negative high |

sample weighting

\begin{equation} \alpha_{i} = \begin{cases} \alpha_{i} e^{-\hat{w}_{t}}, \text{if correct}\\ \alpha_{i} e^{\hat{w}_{t}}, \text{if mistake} \end{cases} \end{equation}

The intuition:

| Sample Correct | Classifier Quality | Weight |

|---|---|---|

| yes | good | decrease |

| yes | random | stays the same |

| yes | bad | increase |

| no | good | increase |

| no | random | stays the same |

| no | bad | decrease |

after every iteration, we normalize weights to 1 $αi = $.

weighting?

- gradient descent: should weight contribution \(i\) by \(\alpha_{i}\) in gradient.

- decision trees: weight the classification error on point \(i\) by \(\alpha_{i}\)