Introduction

Recent advances of language models (LMs) introduced the possibility of in-context, few or zero-shot reasoning (Brown et al. 2020) using LMs without much or any fine tuning.

Yet, classically, LM decoding takes place in a left-to-right fashion, auto-regressively resolving one token at a time by sampling from the output distribution of possible next words without multi-step planning.

Work in LM agents have taken steps to solve more complex problems that would typically require multi-step reasoning even while using this direct decoding approach. The simplest idea, named “chain-of-thoughts” (CoT), involves forcing the LM at decode time to begin the decoding process with natural language reasoning about its actions (Wei et al. 2022). The method has contributed to the creation of powerful language agents (Yao, Zhao, et al. 2023) that can reason about complex actions.

Despite the relative success of CoT, the scheme still does not support any kind of backtracking as it samples directly from the LM’s posterior distribution. When a problem requires a significantly large number of steps to solve, issues relating to “de-generation” (Holtzman et al. 2020) becomes increasingly prevalent: whereby, naive maximizations of sequence likelihood results in a most likely sub-phrase being repeated which does not contribute to increased information or progress on a problem.

Recent theoretical work suggests these types of degeneration arises due to the distortion of output probability density caused by the last-layer softmax projection into the probability simplex (Finlayson et al. 2023): due the lower degrees of freedom offered by a probability syntax, both high and low tails of the latent next-word distribution becomes emphasized in the output probability distribution.

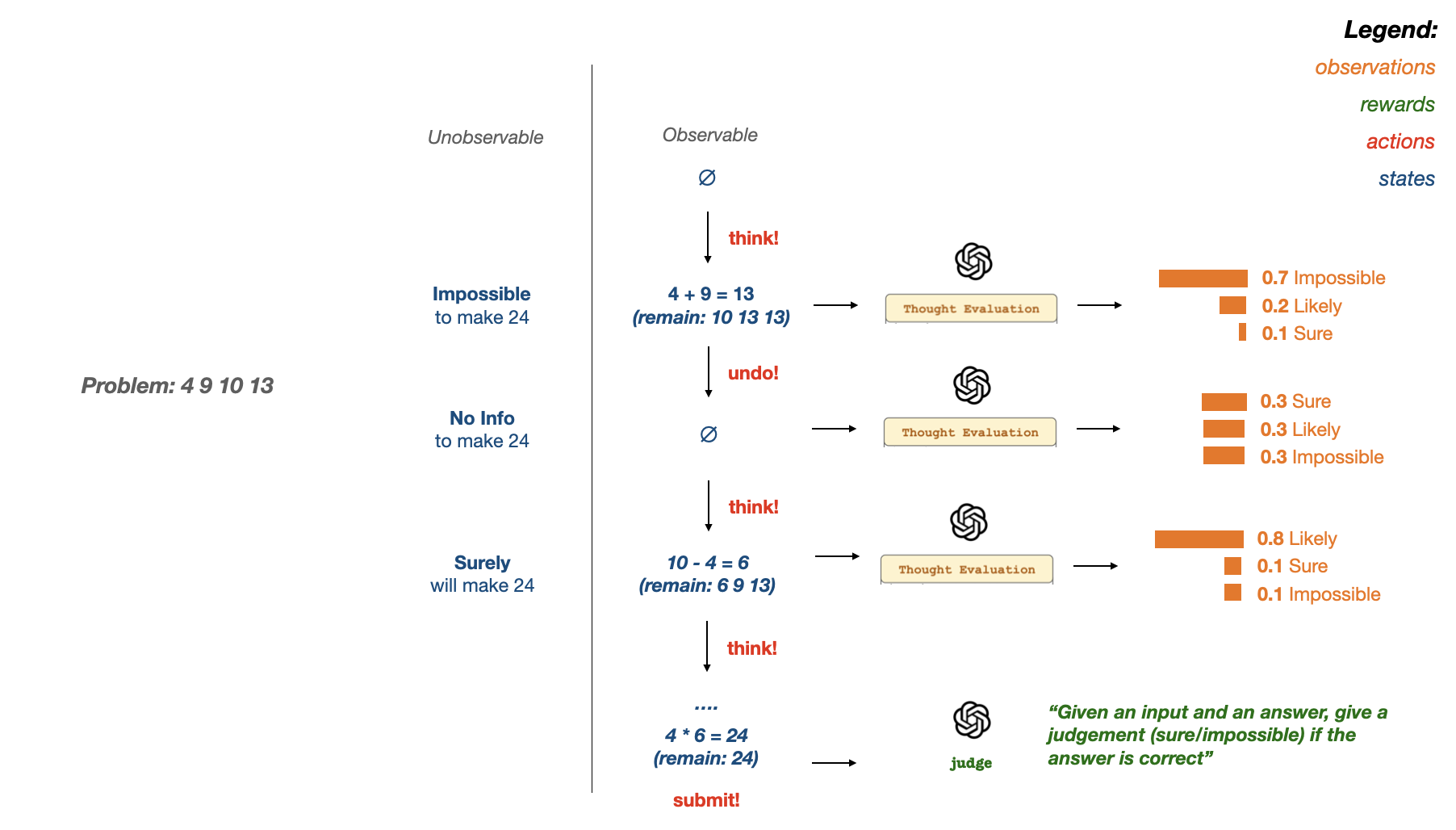

To address this, recent approaches such as Tree of Thoughts (ToT) (Yao, Yu, et al. 2023) have separated the process of next-step proposal (“thinking”) and choosing the actual step to take given a situation (“reasoning”). This separate allows the representation of a problem through only short decoding sequences that are less prone to degeneration, while allowing a separate LM call to score the value of being at any given partial solution through a maximum-likely single-token output that is less likely to be distorted.

In this work, we extend the ToT prompting scheme to formalize this process of “thinking” and “reasoning” via a Language Model as a Partially Observable Markov Decision Process (POMDP). We call this decoding scheme the Lookahead Sampler (LS).

The key underlying assumption of the proposed LS scheme involves the claim that LMs are able to make judgments about the value of a subsequence towards solving a problem by analyzing the likelihood of a particular sequence against a judgment of value. This assumption is supported by the existence of reinforcement learning formulations of LM-on-LM output verification—both for reasoning ((Verma et al. 2022)) and hallucination ((Liu et al. 2022))–as well as the use of LM-inferred state value heuristics in the ToS approach.

We leverage this assumption by, similar to ToT, using the LM’s evaluation of the likelihood of a sequence (similar to LM “scoring” of a “thought” in ToT) as a heuristic for the coherence and reasoning within a subsequence of LM output—forming a “self reflection” scheme similar to other LM-scoring schemes previously proposed (Paul et al. 2023; Shinn, Labash, and Gopinath 2023). Yet, differing from ToT, we explicitly formulate this scoring by an LM as an “observation” of an unobservable underlying latent understanding of the input sequence.

By solving the LS problem with the anytime POMCP solver (Silver and Veness 2010), we further demonstrate that LS exhibits stronger anytime characteristics on the Game of 24 task as compared to ToT while maintaining performance that is comparable to ToT and superior to CoT. Lastly, we were able to obtain these results at lower costs to ToT evaluations by using a hybrid language modeling approach by using a larger language model, GPT-4, for posterior sampling and evaluation while using a smaller language model, GPT-3.5-Turbo-Instruct, as the “thought” generator.

Tree of Thoughts

We provide here a short summary of the Tree of Thoughts (ToT) (Yao, Yu, et al. 2023) approach that is relevant to our model. ToT offers a scheme to enable multi-step reasoning with LMs by presenting a decomposition of multi-step LM reasoning into individual steps which is then combined through classic approaches in search and planning.

Specifically, ToT represents a given problem as a finite-horizon planning problem which it then solves in four broad steps.

Thought Decomposition: by leveraging problem-specific characteristics, each problem is decomposed into distinct, incremental steps towards a solution. For the “Game of 24” task, for instance, each “thought” is considered a line of equation which contributes to the overall task of combining four numbers to reach 24.

Now, let \(p_{\theta}\) be our language model, \(s_{j}^{(i)}\) be thought candidate \(j\) of step \(i\) of a decomposed problem, \(s_{*}^{(i)}\) the optimal thought to continue from at step \(i\), \(\tau_{ *} = \qty[s^{(1)}_{ *}, …, s^{(n)}_{ *}]\) a “solution” to a given problem.

Thought Generation: multiple, initial short decodings of a LM—sampling from \(s’ \sim p_{\theta}^{thought}\qty(s^{(i+1)} | s^{(i)})\) is obtained which forms a series of next states (“thoughts”) which encodes a partial step towards the solution which is reachable at any given state.

Thought Evaluation: another LM call rates each of the possible next states for their chance in reaching the solution; specifically, we ask the LM to reason about a given state by calculating the posterior probabilities of predicting a specific judgement of value (the words “sure”“likely”“impossible”) given that state; that is: \(V(s_{j}) = \arg\max_{o} \{p^{value}_\theta(o|s_{j}), o \in \{ sure, likely, impossible\}, \forall j \in 1 … n\).

Problem Solving: finally, given this heuristic, solving a specific problem in ToT involves using a search-and-planning scheme (specifically, DFS or BFS) to cycle between generation and evaluation of thoughts until a terminal thought is reached. Branches on the DFS tree is pruned if they are voted as “impossible”.

By combining explicit planning and LM reasoning, this approach achieved state-of-the-art results on the Game of 24 and other difficult natural-language tasks such as a crossword. However, the ToT approach does not incorporate any form of heuristic-guided preferential planning between different “possible” states—in contrast to dynamic approaches which preferentially explore sequences of high probability of success.

Methods

Problem Formulation

Our work formalizes and augments the stepwise decoding scheme proposed by ToT as a Partially Observable Markov Decision Process (POMDP) (Kaelbling, Littman, and Cassandra 1998). A POMDP is a search and planning formulation which emphasizes the uncertain nature of intermediate steps by formalizing each problem into a tuple \((\mathcal{S}, \mathcal{A}, \mathcal{O}, \mathcal{T}, \mathcal{R}, \gamma, s_0)\).

We specifically define our problem formulation as follows:

- \(\mathcal{S} = S \times U\), where \(s \in S\) is each sub-step of our decomposed problem and \(u \in U\) representing the unmeasurable, true underlying value being estimated by \(V(s)\) in ToT representing the usefulness of a particular thought

- \(\mathcal{A} = [a_0, a_1, a_2]\), a discrete set of possible problem-solving actions—to “continue” expanding a particular branch \(a_0\), to “rollback” to previous branch \(a_1\), or to “think” a new thought at the current branch \(a_2\).

- \(\mathcal{O} \in S \times U\), exactly the same as \(\mathcal{S}\), but instead of the unobservable underlying value of a given \(s\) we obtain \(V(s)\) instead from the language model by asking the language model for its judgement regarding a state; importantly, because the observations are simply a sample on the distribution, we can directly use \(V(s_{j}) \sim p^{value}_\theta(o|s_{j}), o \in \{ sure, likely, impossible\}, \forall j \in 1 … n\)

- \(\mathcal{T}\) is given deterministically given the state and action—“continue” appends the current state to the solution trajectory, yielding a new subproblem, and calculates a new thought; “rollback” pops the last item in the solution trajectory back into the current state and reverts to the previous subproblem; “think” reformulates a new state given the current subproblem

- \(\mathcal{R}\) is given by a language model evaluator given a final trajectory, where: \(r_{\max}\) is given if the LM believes a trajectory successfully solves the problem, and \(r_{\min}\) is given if the LM believes a trajectory failed to solve the problem

Lastly, for this problem, we set discount \(\gamma\) to \(1\) to maximize joint reward, and \(s_0\) would be the initial, unsolved problem.

Modified POMCP

To solve the formalization given above in an actual problem, we chose the POMCP solver (Silver and Veness 2010). This solver is chosen for three primary reasons.

First, by only needing actions and observation sequences as input, the solver requires no explicit distribution on observation and transitions, meaning that simply making concrete samples from the language model posterior is enough to take advantage of its distributional nature.

Second, the POMCP solver has excellent anytime performance characteristics; the search tree for possible solutions will prioritize most possible solutions as rated by intermediate value, but can expand to (at the worst case) an exhaustive search of all possible intermediate states. In particular, easier problems will have stronger heuristic signals, which typically will take less time to solve; this means that a cutoff could be specified by the user to control the speed/accuracy trade-off in solving a given problems.

Specifically, a POMCP solver collects a tree based on sequences of actions and their resulting observations \(h = \{a_1, o_1, …\}\). When planning for a specific action, the scheme samples a series of possible next states from a generative model given your current state and action \(s’ \sim T(\cdot | s,a)\) and calculates reward \(R(s,a)\) from current state.

Once this procedure grows the tree to a certain depth, a point-wise value estimate is calculated from a roll-out.

For this specific problem, we modify the typical “rollout” rollout procedure by essentially performing CoT reasoning with a weighted average of the possible rewards in obtained in the end:

\begin{algorithm} \caption{obtain a value estimate at some leaf state $s_{f}$}\label{alg:cap} \begin{algorithmic} \Ensure $d > f$ \State $s = s_{f}$ \State $L \gets d-n$ \Comment{Depth Remaning in Rollout} \State $\tau \gets \{s_0, \dots, s_{f}\}$ \While{$L \neq 0$} \State $\tau \gets \tau \cup \left\{\arg\max_{s’} \qty(p^{thought}_{\theta}(s’|s))\right\}$ \State $s = s’$ \State $L \gets L-1$ \EndWhile \State $V = \frac{R_{\max} \cdot p_{\theta}^{evaluate}(\tau^{*}|\tau)+R_{\min} \cdot p_{\theta}^{evaluate}(\neg\tau^{*}|\tau)}{R_{\max}+R_{\min}}$\Comment{LM-Posterior Weighted Average of Possible Reward} \State \Return $V$ \end{algorithmic} \end{algorithm}

where, \(p_{\theta}^{thought}\) is the “thought” generation prompt previously discussed, and \(p_{\theta}^{evaluate}\) is the evaluation prompt to check if a particular trajectory truly solves the target task which is also used in reward calculation. Recall that \(\tau^{*}\) represents a trajectory which answers the given question correctly.

As CoT is a reasonably performant reasoning procedure that is relatively lightweight to compute, we believe it would serve to raise the lower bound of possible values, and therefore aid the speed of solution in POMCP.

Task Setup

Similar to ToT, we are going to use the Game of 24 as a difficult multi-step reasoning task with which to test the scheme proposed.

The Game of 24 is a mathematical reasoning game, which uses four numbers and basic arithmetic operations to obtain a value of 24. For instance, for the problem of 4 9 10 13, a solution trajectory may look as follows:

- \(s_0\): subproblem: 4 9 10 13

- \(s_1\): \(13-9=4\), subproblem: 4 4 10

- \(s_2\): \(10-6 = 6\), subproblem: 4 6

- \(s_3\): \(4 \cdot 6\), subproblem: 24

which concludes the solution.

Data Source: in order to maintain comparability to ToT, we leverage the exact dataset curated by Yao et. al. scraped from 4nums.com as our problem set as well. Importantly, the data is sorted by rate of difficulty (as measured by weighted average time for solution)

Benchmark: the “success rate” metric reported involves the success rate across 100 games, corresponding to the metric reported by ToT. Additionally, we also report time-to-solve metrics as measured by the time between the initialization of an empty POMCP tree to obtaining a proposed solution from the scheme.

Language Modeling: distinct from ToT, to perform language model, we use two separate language models. \(p_{\theta}^{evaluate}\) and \(p_{\theta}^{value}\) (for \(\mathcal{R}\) and \(\mathcal{O}\) respectively) were computed using GPT-4-Turbo (1106), and \(p_{\theta}^{thought}\) was computed using GPT-3.5-Turbo-Instruct (0914). This hybrid approach allows for single-token only inference on the larger GPT-4 models, affording dramatic performance improvements.

Solving: we performed language model inference through the OpenAI Azure Cognitive Services API and used the POMDPs.jl, BasicPOMCP.jl Julia packages for the orchestration of the solver.

Results

| Method | Success |

|---|---|

| CoT | 4.0% |

| ToT (b=1) | 45% |

| ToT (b=5) | 74% |

| LS (ours) | TODO |

[plot of dificulty vs time]

As shown in [the table], we have [results]. Specifically, we have [these results, which are hopefull ygood]—far exceeding results from CoT (Wei et al. 2022) and are compatible with the approach in ToT.

Furthermore, Figure [figure] shows the anytime nature of the proposed solver. As problem difficulty (as rated by solution time-weighted percentage of 4nums.com users’ solutions) increases, the time it requires for our solver to identify the correct answer increases as well.

Conclusion

In this work, we propose Lookahead Sampler (LS), a novel language model decoding scheme that extends ToS (Yao, Yu, et al. 2023) which leverages a large language model’s self-reflective reasoning capabilities (Paul et al. 2023; Shinn, Labash, and Gopinath 2023) to guide multi-hop reasoning about a problem.

We formalize our approach through the POMDP framework (Kaelbling, Littman, and Cassandra 1998), and demonstrate comparable performance of our approach on the Game of 24 problem to ToT through using the online POMCP (Silver and Veness 2010) solver. Because of the anytime behavior of POMCP, we are able to demonstrate anytime scaling properties of our solver’s behavior: more difficult problems takes longer and more LM inferences to solve. Taken together, these properties makes LS a more flexible approach to solving basic multi-step reasoning tasks as compared to previous approaches—allowing for contemporary LMs to solve more complex problems.

In its current form, this work has two key limitations. First, similar to that proposed by ToT, the approach is still significantly more computationally expensive than CoT or other direct decoding approaches; therefore, these search techniques is likely unnecessary for problems which can be solved with high accuracy using simpler techniques. Second, posterior distributions—even when taking only top-k samples for extremely small k—are still meaningful only on billion-parameter models if used without additional fine tuning (Hu and Levy 2023): making the heuristic-driven performance improvements of LS limited in scope. With additional fine-tuning of surrogate value models, LS could likely perform dramatically more efficiently while obtaining its positive characteristics in solution quality.