Elimination Matrices

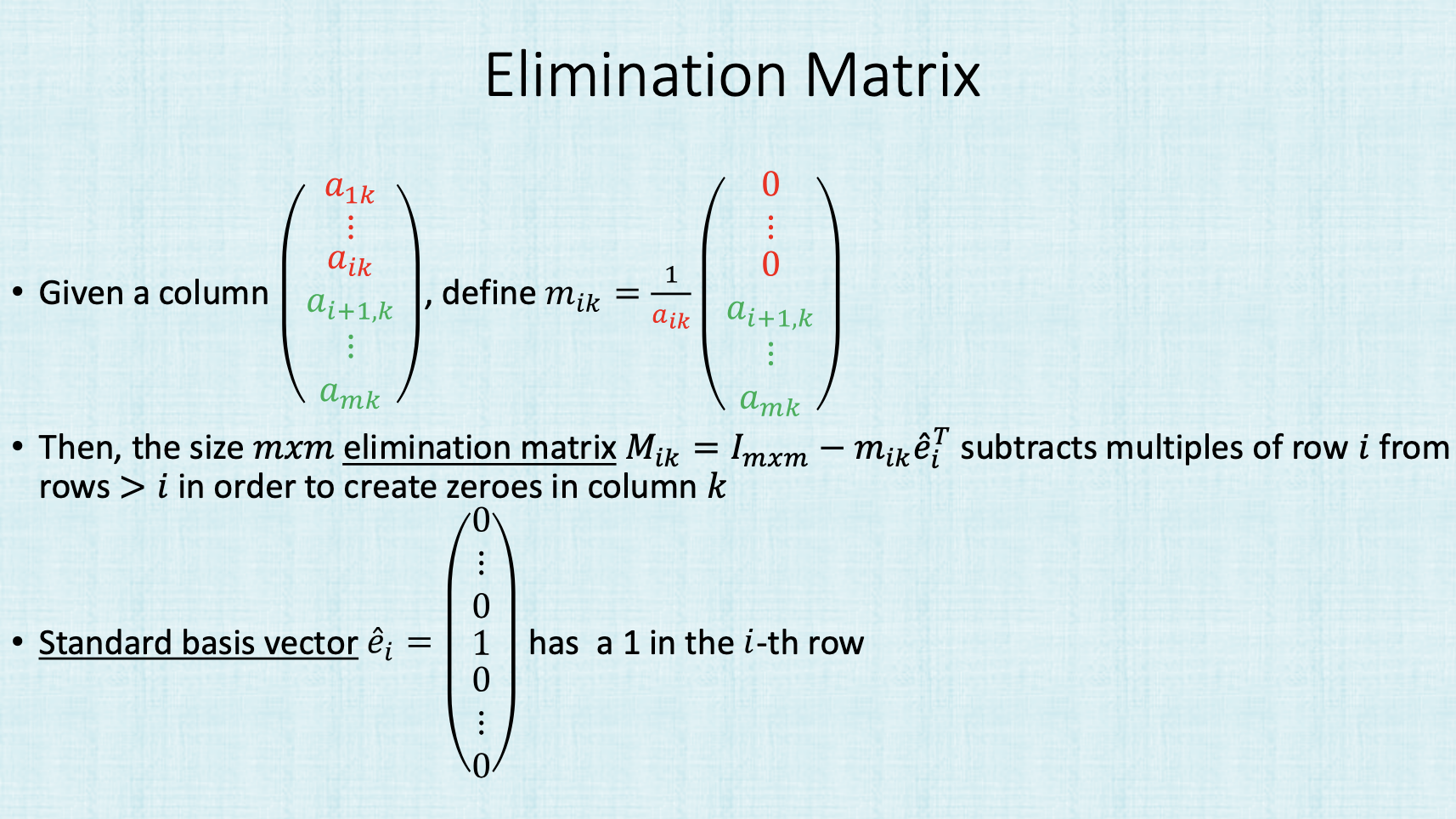

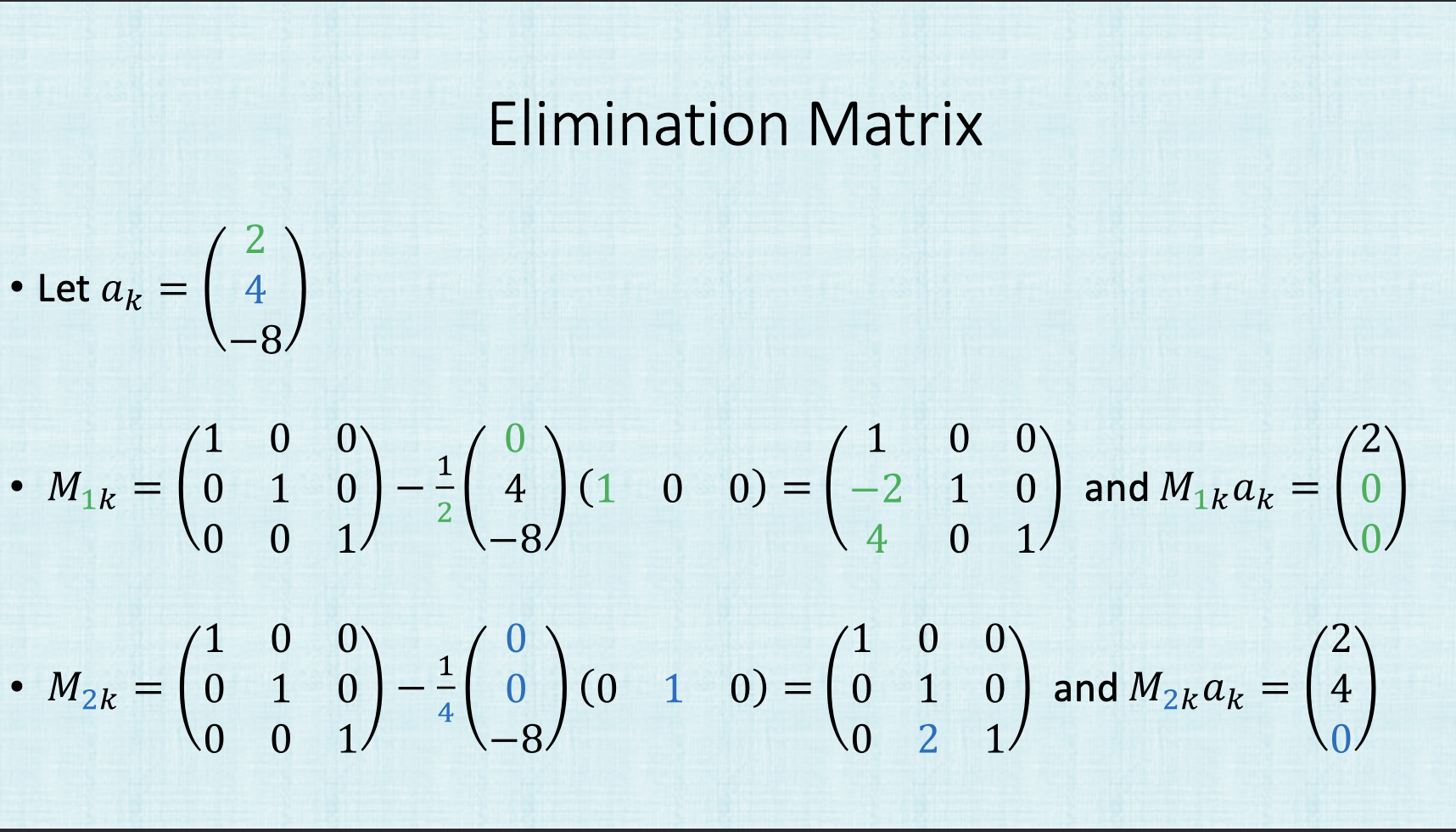

Last edited: August 8, 2025

For a matrix \(A\), we want to build a series of matrices \(M\), each of which zeros out one column of \(A\) below its pivot. This is an algorithmic approach to elimination, applied column by column.

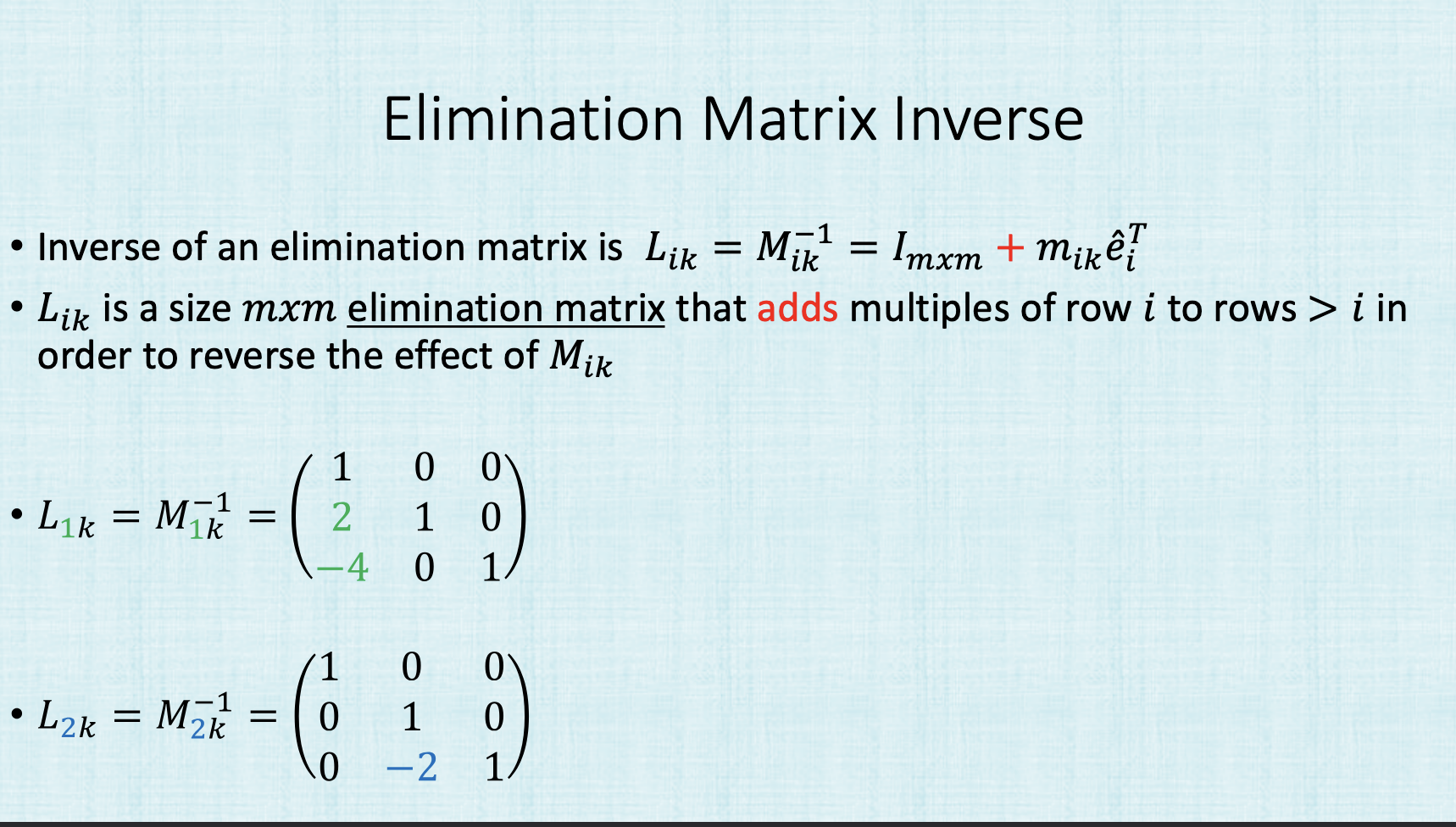
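A minimal sketch of one such step, in plain Python with exact fractions. The helper names (`identity`, `matmul`, `elimination_matrix`) and the example matrix are assumptions made for illustration; they are not taken from the notes.

```python
from fractions import Fraction


def identity(n):
    """n x n identity matrix with Fraction entries."""
    return [[Fraction(int(i == j)) for j in range(n)] for i in range(n)]


def matmul(X, Y):
    """Plain matrix product of two lists-of-rows."""
    return [
        [sum(X[i][k] * Y[k][j] for k in range(len(Y))) for j in range(len(Y[0]))]
        for i in range(len(X))
    ]


def elimination_matrix(A, k):
    """Build M_k so that M_k A has zeros below the pivot A[k][k] in column k."""
    n = len(A)
    M = identity(n)
    for i in range(k + 1, n):
        # Row i gets (row i) - (A[i][k] / A[k][k]) * (row k).
        M[i][k] = -Fraction(A[i][k]) / Fraction(A[k][k])
    return M


# Hypothetical example matrix.
A = [[2, 1, 1],
     [4, 3, 3],
     [8, 7, 9]]

M1 = elimination_matrix(A, 0)
A1 = matmul(M1, A)  # first column is now zero below the pivot
```

Repeating the same step on column 2 of `A1`, then column 3, and so on, gives the series of matrices \(M\) described above.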

nicely, we can undo our operations

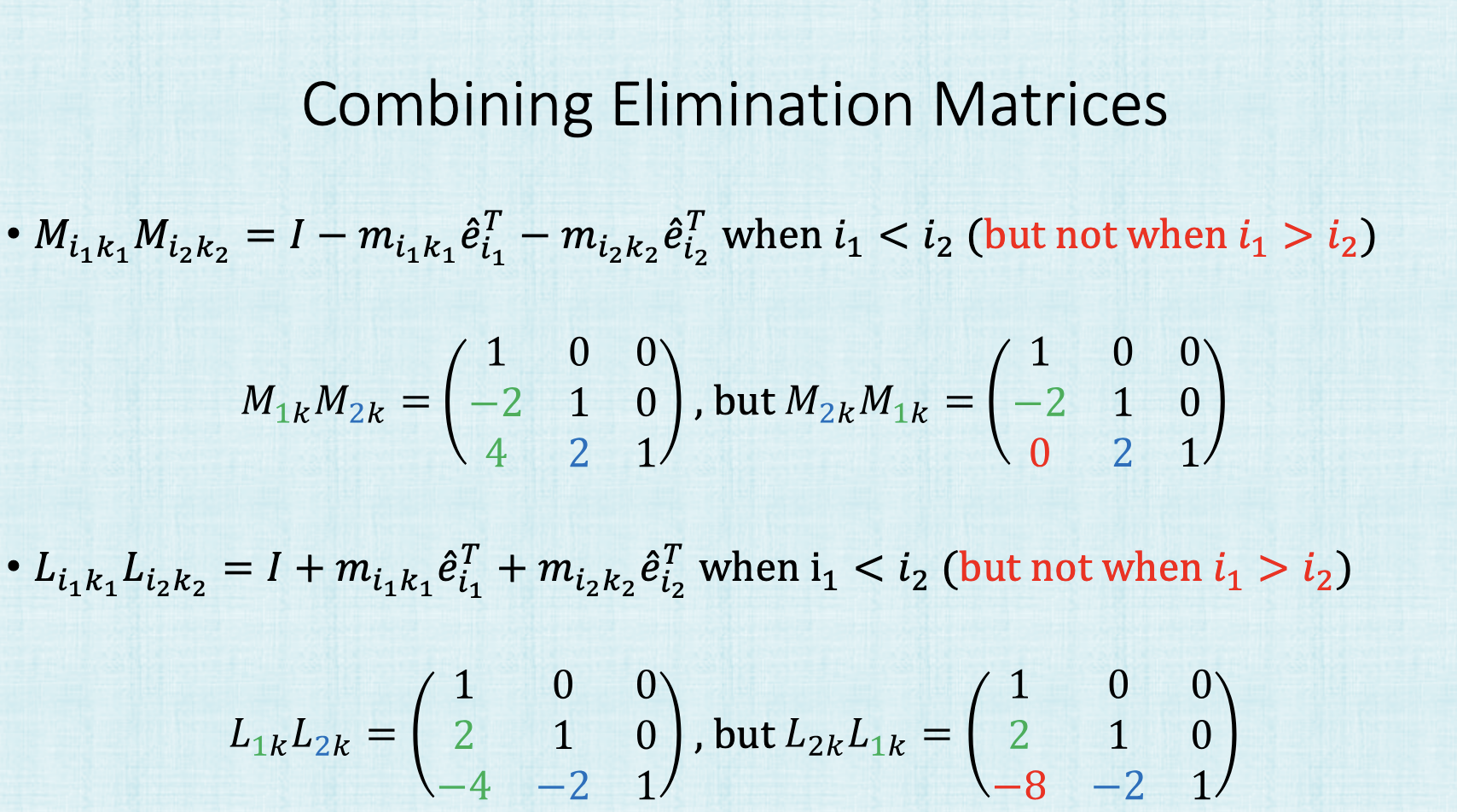

and you can compose them by subtracting together

*THIS IS ONLY TRUE when we are applying in the right ordering, row \(1\) to row \(2\), etc.
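One reading of the two remarks above, sketched in plain Python: an elimination matrix for a single column is undone by flipping the sign of its off-diagonal multipliers, and the product of two such matrices just collects their multipliers when they are multiplied in column order. The matrices `M1` and `M2` and the helper names are assumptions chosen for illustration.

```python
def matmul(X, Y):
    """Plain matrix product of two lists-of-rows."""
    return [
        [sum(X[i][k] * Y[k][j] for k in range(len(Y))) for j in range(len(Y[0]))]
        for i in range(len(X))
    ]


def flip_multipliers(M):
    """Negate off-diagonal entries; inverts a single-column elimination matrix."""
    n = len(M)
    return [[M[i][j] if i == j else -M[i][j] for j in range(n)] for i in range(n)]


I3 = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]

# Hypothetical elimination matrices for column 1 and column 2.
M1 = [[1, 0, 0], [-2, 1, 0], [-4, 0, 1]]
M2 = [[1, 0, 0], [0, 1, 0], [0, -3, 1]]

# Undoing: flipping the multipliers gives the inverse.
M1_inv = flip_multipliers(M1)
undone = matmul(M1_inv, M1)

# Composing in column order: the multipliers simply sit side by side.
in_order = matmul(M1, M2)

# Composing in the other order picks up a cross term in the last row.
out_of_order = matmul(M2, M1)
```

Here `in_order` has the multipliers of both matrices dropped into place, while `out_of_order` does not, which is why the ordering caveat matters.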

pivoting

this procedure breaks on:

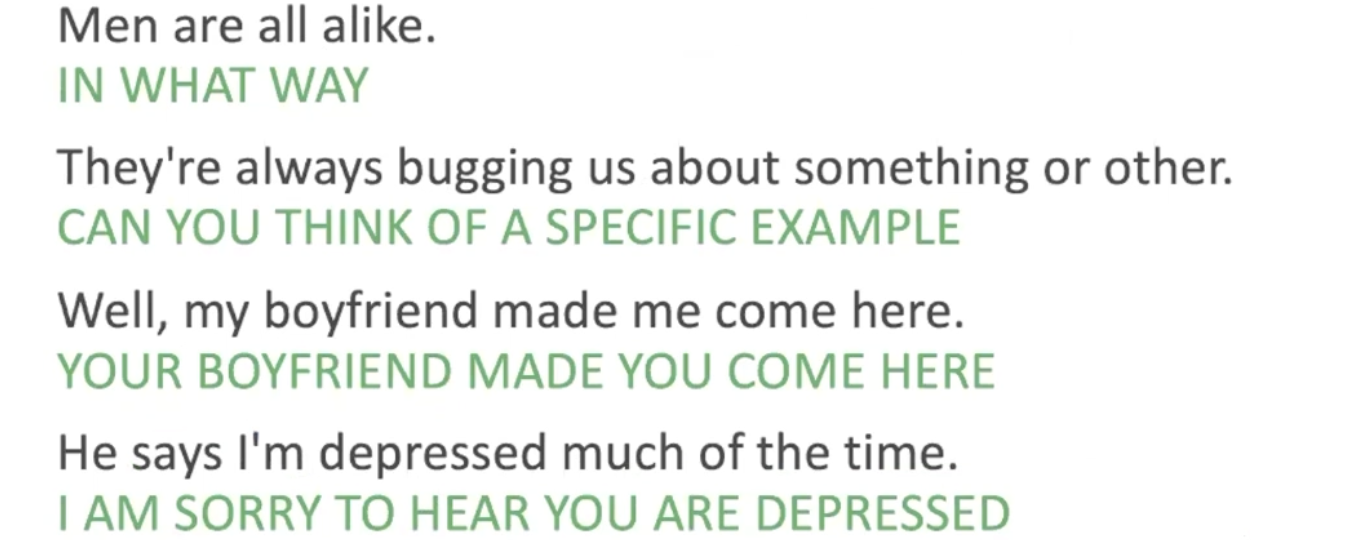
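The image is not reproduced here, so the following is only a sketch under the assumption that the failing case is a zero in the pivot position, which makes the multipliers undefined. The matrix `A` and the helpers `multipliers` and `swap_rows` are hypothetical.

```python
from fractions import Fraction


def multipliers(A, k):
    """Multipliers A[i][k] / A[k][k] for the rows below the pivot in column k."""
    pivot = A[k][k]
    if pivot == 0:
        raise ZeroDivisionError(f"zero pivot in column {k}")
    return [Fraction(A[i][k], pivot) for i in range(k + 1, len(A))]


def swap_rows(A, i, j):
    """Return a copy of A with rows i and j exchanged (a row pivot)."""
    B = [row[:] for row in A]
    B[i], B[j] = B[j], B[i]
    return B


# Assumed failing case: the first pivot is zero.
A = [[0, 1],
     [1, 1]]

try:
    multipliers(A, 0)
    failed = False
except ZeroDivisionError:
    failed = True

# Swapping in a row with a nonzero entry in that column makes the step well defined.
PA = swap_rows(A, 0, 1)
m = multipliers(PA, 0)
```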

ELIZA

Last edited: August 8, 2025

Weizenbaum (1966)

works by applying pattern-action rules that rephrase the user’s input

“You hate me” => “what makes you think I hate you”

Rogerian psychotherapy: assume no real-world knowledge; simply draws out patient’s statements

I need X => what would it mean to you if you got X.

uses regex

capture specific adjectives, “all”, “always”, etc. and responds accordingly
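The two example rewrites above can be sketched with Python's `re` module. The rule list, the response to "all"/"always", and the fallback reply are assumptions for illustration, not ELIZA's original script.

```python
import re

# Hypothetical pattern-action rules: (compiled pattern, response template).
RULES = [
    (re.compile(r"^you (.*) me$", re.IGNORECASE),
     r"What makes you think I \1 you?"),
    (re.compile(r"^i need (.*)$", re.IGNORECASE),
     r"What would it mean to you if you got \1?"),
    (re.compile(r"\b(all|always)\b", re.IGNORECASE),
     "Can you think of a specific example?"),
]


def respond(utterance):
    """Return the response of the first rule whose pattern matches."""
    text = utterance.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.search(text)
        if match:
            # expand() fills \1 with the captured text.
            return match.expand(template)
    return "Please go on."  # assumed fallback when nothing matches
```

For instance, `respond("You hate me")` fills the captured verb into the first template, and a sentence containing "always" falls through to the third rule.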

Eliza Rules

patterns are organized by keywords: a keyword has a pattern and a list of transforms:
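A hypothetical sketch of that organization in Python: each keyword maps to one pattern and a list of transforms. The specific keywords, the second "need" transform, and the choice to cycle through the transforms on repeated matches are all assumptions, not details from the notes.

```python
import re

# keyword -> pattern plus a list of transforms (all entries are illustrative).
RULES = {
    "need": {
        "pattern": re.compile(r"\bi need (.*)", re.IGNORECASE),
        "transforms": [
            r"What would it mean to you if you got \1?",
            r"Why do you need \1?",
        ],
    },
    "always": {
        "pattern": re.compile(r"\balways\b", re.IGNORECASE),
        "transforms": ["Can you think of a specific example?"],
    },
}

# Tracks which transform to use next for each keyword (assumed behavior).
_next_transform = {keyword: 0 for keyword in RULES}


def respond(utterance):
    """Find the first keyword present, match its pattern, apply its next transform."""
    text = utterance.strip().rstrip(".!?")
    for keyword, rule in RULES.items():
        if keyword not in text.lower():
            continue
        match = rule["pattern"].search(text)
        if match is None:
            continue
        transforms = rule["transforms"]
        template = transforms[_next_transform[keyword] % len(transforms)]
        _next_transform[keyword] += 1
        return match.expand(template)
    return "Please go on."  # assumed fallback
```

Calling `respond("I need a break")` twice in a row returns the two "need" transforms in turn.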

Ella Baker

Last edited: August 8, 2025

A civil rights movement organizer who helped found SNCC (the Student Nonviolent Coordinating Committee, pronounced “snick”).

Elo Ratings

Last edited: August 8, 2025

emacs talk

Last edited: August 8, 2025

Hello