mixed-autonomy traffic

Last edited: December 12, 2025

Vehicle Platooning

advantages:

- reduced congestion

- greater fuel economy

hierarchical control of platoons

- vehicle: lateral and longitudinal control

- link: formation, splitting, reordering

- network: planning, goods assignment, etc.
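To make the vehicle-level (longitudinal) layer concrete, here is a minimal sketch that swaps in a standard textbook choice the notes do not specify: a constant time-headway spacing policy with a PD-style follower law. All gains, speeds, and the `simulate_platoon` name are hypothetical. Vehicle lengths are ignored, and lateral control is not modeled.

```python
import numpy as np

def simulate_platoon(n=4, steps=600, dt=0.1, h=1.0, s0=5.0, kp=0.5, kd=1.0):
    """Longitudinal-only platoon with a constant time-headway (CTH) spacing policy.

    Hypothetical illustration: follower i targets a gap of s0 + h * v_i to
    vehicle i-1 and applies a PD-style acceleration on the spacing error and
    the relative speed. The leader simply tracks a 25 m/s cruise speed.
    """
    pos = -20.0 * np.arange(n, dtype=float)   # start 20 m apart (front to front)
    vel = np.full(n, 20.0)                    # everyone starts at 20 m/s
    for _ in range(steps):
        acc = np.empty(n)
        acc[0] = 0.5 * (25.0 - vel[0])                      # leader speed tracking
        gap = pos[:-1] - pos[1:]                            # gap to predecessor
        spacing_err = gap - (s0 + h * vel[1:])              # CTH spacing error
        acc[1:] = kp * spacing_err + kd * (vel[:-1] - vel[1:])
        vel = vel + dt * acc                                # semi-implicit Euler step
        pos = pos + dt * vel
    return pos, vel

pos, vel = simulate_platoon()
print(vel, pos[:-1] - pos[1:])
```

With these gains the platoon settles at the leader's 25 m/s with gaps of s0 + h * v = 5 + 25 = 30 m. The headway h trades gap length (and hence the drafting benefit) against robustness to disturbances propagating down the platoon.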

Goal: can we dynamically form platoons that minimize travel time while also minimizing fuel cost?

Traffic Coordination with MARL

To coordinate UAVs, we can formulate the problem as a decentralized POMDP (Dec-POMDP). Key insight: roll out both your own policy and a simulation of the other agents.

Also run MPC with truncated rollouts.
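A toy sketch of both ideas together, under loudly stated assumptions: one ego agent on a 1D line, other agents assumed to move at constant velocity (this stands in for the "simulation of others"), a hand-picked cost, and exhaustive search over short action sequences. Each step, the ego rolls out itself and the simulated others over a truncated horizon, executes only the first action of the best plan, and re-plans (receding-horizon MPC). All names are hypothetical, and this is a single-agent planner, not a Dec-POMDP solver.

```python
import itertools
import numpy as np

ACTIONS = (-1.0, 0.0, 1.0)  # hypothetical per-step moves for the ego agent

def rollout_cost(ego, others, other_vels, plan, goal, safe_dist=1.0):
    """Roll out the ego plan *and* a simulation of the other agents."""
    cost = 0.0
    for a in plan:
        ego = ego + a
        others = others + other_vels              # assumed constant-velocity model of others
        cost += abs(goal - ego)                   # distance-to-goal term
        if np.any(np.abs(others - ego) < safe_dist):
            cost += 100.0                         # collision penalty
    return cost

def mpc_action(ego, others, other_vels, goal, horizon=3):
    """Truncated-horizon MPC: score every H-step plan, return the best plan's first action."""
    plans = itertools.product(ACTIONS, repeat=horizon)
    best = min(plans, key=lambda p: rollout_cost(ego, others, other_vels, p, goal))
    return best[0]

def run(ego, others, other_vels, goal, steps=10):
    others = np.asarray(others, dtype=float)
    other_vels = np.asarray(other_vels, dtype=float)
    for _ in range(steps):
        ego += mpc_action(ego, others, other_vels, goal)  # execute only the first action
        others = others + other_vels                      # world advances, then re-plan
    return ego

print(run(0.0, [2.0], [1.0], goal=5.0))
print(run(0.0, [2.0], [0.0], goal=5.0))
```

With one other agent starting at 2 and moving +1 per step, the ego reaches the goal at 5. With that agent stationary, the planner stops at 1 rather than pay the collision penalty. Exhaustive search costs 3^H rollouts per step, which is one reason to truncate the horizon.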

MoE Review Index

Project Thoughts

Overall Question: “Why is growing a better idea than training larger models from scratch?”

Cost of Specialization

Sub-Question: “how much performance does the load-balancing loss cost, compared with relying on specialized data?”

- For our goals, our data is much more specific (i.e. personalized), meaning we don’t necessarily need to rely on the ModuleFormer load balancing loss tricks.

- Switch Transformers shows that, with standard regularization (including dropout), routing each token to a single expert can be sufficient to answer many questions (perhaps 1+1, i.e. one shared plus one routed expert, as in shared-expert setups)
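For reference on this sub-question, the Switch Transformers auxiliary load-balancing loss is alpha * N * sum_i f_i * P_i, where f_i is the fraction of tokens dispatched (top-1) to expert i and P_i is the mean router probability for expert i. A minimal NumPy sketch; the function name and the default alpha = 0.01 are choices made for illustration:

```python
import numpy as np

def switch_load_balancing_loss(router_logits, alpha=0.01):
    """Switch-style auxiliary loss: alpha * N * sum_i f_i * P_i.

    router_logits: array of shape (T, N) for T tokens and N experts.
    """
    T, N = router_logits.shape
    z = router_logits - router_logits.max(axis=1, keepdims=True)  # stable softmax
    probs = np.exp(z) / np.exp(z).sum(axis=1, keepdims=True)
    f = np.bincount(probs.argmax(axis=1), minlength=N) / T  # fraction of tokens per expert
    P = probs.mean(axis=0)                                   # mean router prob per expert
    return alpha * N * np.sum(f * P)

balanced = switch_load_balancing_loss(np.eye(4) * 10.0)
collapsed = switch_load_balancing_loss(np.tile([10.0, 0.0, 0.0, 0.0], (4, 1)))
print(balanced, collapsed)
```

A perfectly balanced router gives exactly alpha, while sending every token to one expert pushes the loss toward alpha * N. How much that pressure costs when the data is already personalized is the open question above.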

How Much Expert is an Expert?

Sub-Question: “do all experts have to have the same representational power?”

MOEReview Fedus: Switch Transformers

At scale, with regularization (including dropout), top-1 (k=1) expert routing is fine!
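A minimal sketch of what k=1 routing means mechanically, assuming linear experts in place of FFNs and no capacity limit: each token goes only to its argmax expert, and that expert's output is scaled by the router probability so the router still receives a gradient signal. `switch_top1` is a hypothetical name.

```python
import numpy as np

def switch_top1(x, w_router, expert_weights):
    """k=1 routing over hypothetical linear experts.

    x: (T, d) tokens, w_router: (d, N), expert_weights: list of N (d, d) matrices.
    """
    logits = x @ w_router
    z = logits - logits.max(axis=1, keepdims=True)
    probs = np.exp(z) / np.exp(z).sum(axis=1, keepdims=True)
    choice = probs.argmax(axis=1)                 # one expert per token
    gate = probs[np.arange(len(x)), choice]       # router prob of the chosen expert
    out = np.zeros_like(x)
    for e, W in enumerate(expert_weights):
        mask = choice == e
        out[mask] = gate[mask][:, None] * (x[mask] @ W)
    return out, choice

x = np.array([[1.0, 0.0], [0.0, 1.0]])
out, choice = switch_top1(x, 5.0 * np.eye(2), [np.eye(2), 2.0 * np.eye(2)])
print(choice, out)
```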

MOEReview Gale: MegaBlocks

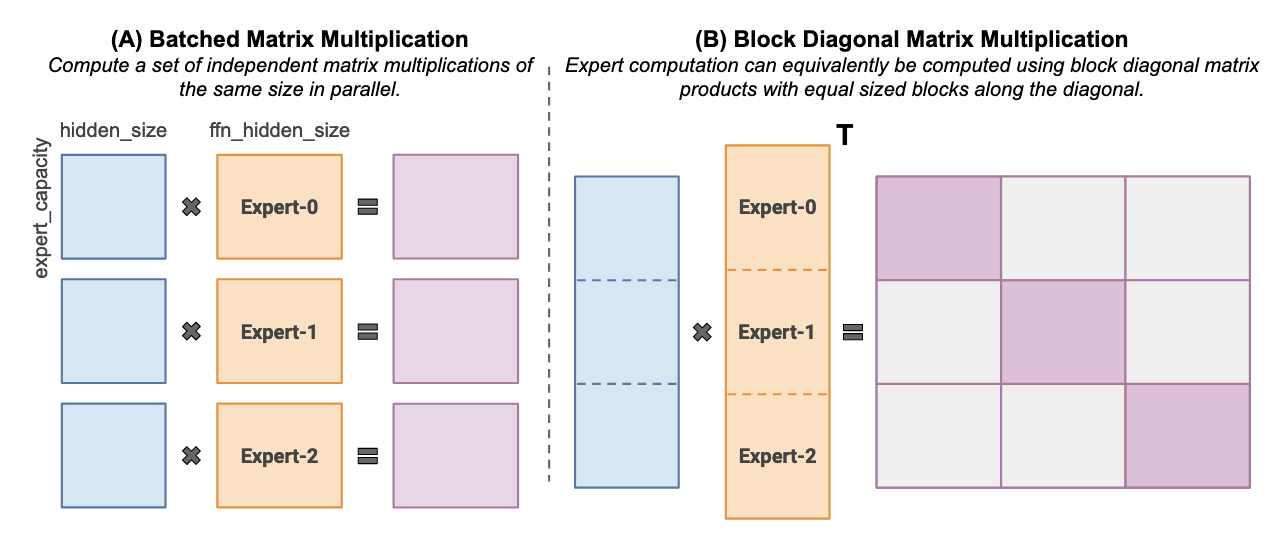

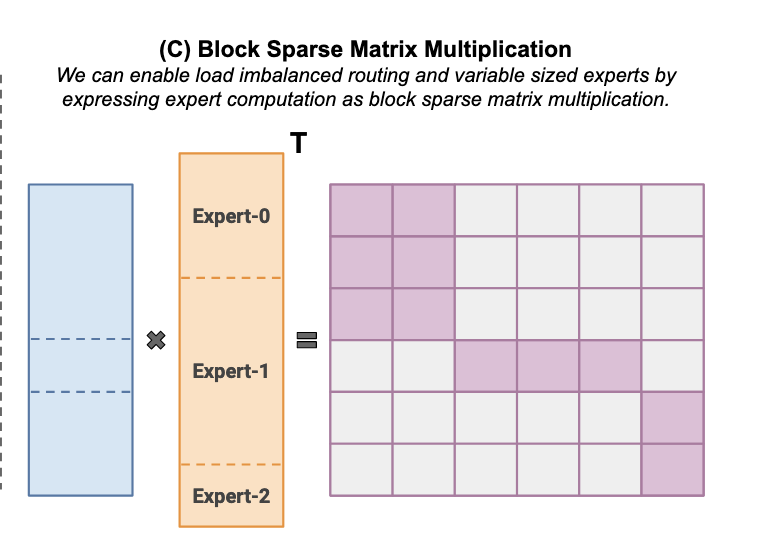

Standard MoEs either waste computation by padding unused capacity within each expert, or drop the tokens routed to an expert once it exceeds its capacity (i.e. truncate so that we don’t have to pad too much).
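The trade-off can be made concrete with a fixed per-expert capacity of ceil(capacity_factor * T / N), the Switch-style definition. This hypothetical helper counts how many tokens get dropped and how many slots get padded for a given top-1 routing:

```python
import math
import numpy as np

def capacity_accounting(assignments, num_experts, capacity_factor=1.0):
    """Return (capacity, dropped tokens, padded slots) under a fixed per-expert capacity."""
    T = len(assignments)
    capacity = math.ceil(capacity_factor * T / num_experts)
    counts = np.bincount(assignments, minlength=num_experts)
    dropped = np.maximum(counts - capacity, 0).sum()  # overflow tokens are truncated
    padded = np.maximum(capacity - counts, 0).sum()   # unused slots are wasted compute
    return capacity, int(dropped), int(padded)

routing = np.array([0, 0, 0, 0, 0, 1, 1, 2])
print(capacity_accounting(routing, 4, 1.0))
print(capacity_accounting(routing, 4, 2.0))
```

For 8 tokens over 4 experts routed as above, a capacity factor of 1.0 gives capacity 2, with 3 dropped tokens and 3 padded slots. Raising the factor to 2.0 drops only 1 token but pads 9 slots, so neither setting removes both costs.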

Method

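Instead of computing the experts as a batched matrix multiplication over fixed-capacity (padded or truncated) per-expert token buffers,

we compute all experts at once as a block-sparse matrix multiplication, where each expert's block is sized by the number of tokens actually routed to it,

and leverage efficient block-sparse multiplication kernels to support variably-sized experts without padding or dropping tokens.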
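The arithmetic that such a block-sparse product performs can be sketched in NumPy under simplifying assumptions: sort tokens by assigned expert, give each expert a contiguous slice of whatever size it received, and multiply slice by slice. This is not MegaBlocks' GPU kernel; the Python loop only shows that no token is padded or dropped. `dropless_moe` and the linear experts are hypothetical.

```python
import numpy as np

def dropless_moe(x, choice, expert_weights):
    """Compute y[t] = x[t] @ W[choice[t]] for every token with no capacity limit.

    Sorting by expert gives each expert a contiguous, variable-size slice;
    conceptually these slices are the diagonal blocks of one block-sparse matmul.
    """
    order = np.argsort(choice, kind="stable")
    counts = np.bincount(choice, minlength=len(expert_weights))
    bounds = np.concatenate([[0], np.cumsum(counts)])
    x_sorted = x[order]
    y_sorted = np.empty((x.shape[0], expert_weights[0].shape[1]))
    for e, W in enumerate(expert_weights):
        lo, hi = bounds[e], bounds[e + 1]
        y_sorted[lo:hi] = x_sorted[lo:hi] @ W   # block size = tokens routed to expert e
    y = np.empty_like(y_sorted)
    y[order] = y_sorted                         # undo the sort
    return y

rng = np.random.default_rng(0)
x = rng.standard_normal((7, 3))
choice = np.array([0, 2, 2, 2, 1, 2, 0])        # imbalanced routing, nothing dropped
Ws = [rng.standard_normal((3, 4)) for _ in range(3)]
y = dropless_moe(x, choice, Ws)
```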

MOEReview Kaushik: Universal Subspace Hypothesis

One-Liner

There’s a low-rank “shared” universal subspace across many pretrained LMs, which could thus be leveraged to adapt a model to new tasks more easily.

Notable Methods

Did a PCA, and projected variance from one architecture to others (i.e. LoRAs trained for different things).
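One hypothetical reading of this measurement, as a sketch: stack flattened weight updates (e.g. LoRA ΔW matrices) row-wise, fit PCA via SVD, and check how much of a held-out update's variance falls inside the top-k subspace. The data below is synthetic and built with a shared rank-5 basis by construction, so it only demonstrates the measurement, not the paper's finding.

```python
import numpy as np

def fit_subspace(vectors, k):
    """PCA via SVD on row-stacked flattened weights: mean, top-k basis, explained-variance ratio."""
    mean = vectors.mean(axis=0)
    _, s, vt = np.linalg.svd(vectors - mean, full_matrices=False)
    var = s ** 2
    return mean, vt[:k], var[:k].sum() / var.sum()

def captured_fraction(v, mean, basis):
    """Fraction of a held-out vector's centered energy lying inside the fitted subspace."""
    c = v - mean
    proj = basis.T @ (basis @ c)
    return float(proj @ proj / (c @ c))

rng = np.random.default_rng(0)
D, r, n = 500, 5, 100
U = rng.standard_normal((r, D))                       # synthetic shared basis
train = rng.standard_normal((n, r)) @ U + 0.01 * rng.standard_normal((n, D))
held_out = rng.standard_normal(r) @ U + 0.01 * rng.standard_normal(D)
unrelated = rng.standard_normal(D)                    # no shared structure

mean, basis, explained = fit_subspace(train, k=r)
print(explained, captured_fraction(held_out, mean, basis), captured_fraction(unrelated, mean, basis))
```

A held-out update drawn from the same synthetic basis lands almost entirely in the top-5 subspace, while an unrelated random vector does not; a real experiment would replace `train` with flattened weights from actual models.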