Yao's Next-Bit Prediction Lemma

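Last edited: August 8, 2025

If you can prove that for every \(i\), you can’t efficiently predict the \(i\)-th output bit from the first \(i-1\) bits with probability noticeably better than \(1/2\), then the output is pseudorandom (computationally indistinguishable from uniform).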
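A toy illustration of the next-bit condition, assuming a deliberately weak, hypothetical generator (`weak_generator` appends the parity of its seed) and a hypothetical `parity_predictor`. The sketch enumerates every seed and computes the exact probability that the predictor guesses bit \(i\) (0-based in the code) from the bits before it. At the parity position the predictor always succeeds, so this generator fails the next-bit test. At a position holding a fresh seed bit it does no better than \(1/2\).

```python
import itertools
from typing import Callable, List, Sequence

Bits = List[int]


def weak_generator(seed: Sequence[int]) -> Bits:
    """Hypothetical toy 'generator': the seed bits followed by their parity bit."""
    return list(seed) + [sum(seed) % 2]


def parity_predictor(prefix: Sequence[int]) -> int:
    """Hypothetical predictor: guess that the next bit is the parity of the prefix."""
    return sum(prefix) % 2


def next_bit_success(gen: Callable[[Sequence[int]], Bits],
                     predictor: Callable[[Sequence[int]], int],
                     n_seed: int, i: int) -> float:
    """Exact probability, over all uniform seeds, that predictor guesses
    output bit i (0-based) when shown output bits 0..i-1."""
    hits = total = 0
    for seed in itertools.product((0, 1), repeat=n_seed):
        out = gen(seed)
        hits += predictor(out[:i]) == out[i]
        total += 1
    return hits / total


# Last bit (index 4 for a 4-bit seed) is fully predictable; index 2 is a fresh seed bit.
print(next_bit_success(weak_generator, parity_predictor, 4, 4))  # 1.0
print(next_bit_success(weak_generator, parity_predictor, 4, 2))  # 0.5
```

Exhaustive enumeration only works for tiny seeds; the lemma itself is about efficient predictors against generators with long seeds.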

ycomb

Last edited: August 8, 2025

- vertebrae (backbone): 3 points to remember

- “we are in the business of looking for outliers”

- tarpit ideas

- vision with world + good team

yet another flowchart abstraction

Last edited: August 8, 2025

yafa!

Syntax

[ (Circle) -> [square] -> (Circle) | (Circle) ]

[ (circle) ]

[ <lt1>(self loop) -> <lt1> ]

EXPRESSION = EXPRESSION' ARROW NODE | EXPRESSION' ALTERNATION NODE | STRING

EXPRESSION' = EXPRESSION' ARROW NODE | EXPRESSION' ALTERNATION NODE | NODE

NODE = SQUARE | CIRCLE | ANCHOR

SQUARE = "[" EXPRESSION "]" | ANCHOR "[" EXPRESSION "]"

ANCHOR = "<" LABEL ">"

ALTERNATION = "|"

ARROW = SARROW | DARROW

SARROW = "->"

DARROW = "=>"

LABEL = r"[\w\d]+"

STRING = LABEL | "\"" ".*" "\""
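A minimal recursive-descent parser sketch for the grammar above. It makes several assumptions that the grammar doesn't settle: `CIRCLE` is never defined, so it is assumed to mirror `SQUARE` with parentheses; a lone `NODE` is accepted as an expression, because the `[ (circle) ]` example needs it; and runs of unquoted words are joined into one `STRING`, because `self loop` contains a space that `LABEL` excludes. The left-recursive `EXPRESSION'` rule is parsed as a loop. `Node`, `Chain`, `Parser` and `parse` are hypothetical names.

```python
import re
from dataclasses import dataclass, field
from typing import List, Optional, Tuple, Union

# Tokens: the two arrows, bracket/anchor/alternation symbols, quoted strings, bare words.
TOKEN_RE = re.compile(r'\s*(->|=>|[\[\]()<>|]|"[^"]*"|\w+)')
WORD_RE = re.compile(r"\w+")  # LABEL = [\w\d]+ (\d is already covered by \w)


def tokenize(src: str) -> List[str]:
    src, pos, tokens = src.rstrip(), 0, []
    while pos < len(src):
        m = TOKEN_RE.match(src, pos)
        if not m:
            raise SyntaxError(f"unexpected character at {pos}: {src[pos]!r}")
        tokens.append(m.group(1))
        pos = m.end()
    return tokens


@dataclass
class Node:
    shape: str                     # "square", "circle", or "ref" (bare ANCHOR back-reference)
    anchor: Optional[str] = None   # label from <label>, if any
    body: Optional["Expr"] = None  # nested EXPRESSION; None for "ref"


@dataclass
class Chain:
    first: Node
    rest: List[Tuple[str, Node]] = field(default_factory=list)  # (operator, NODE) pairs


Expr = Union[str, Chain]


class Parser:
    def __init__(self, src: str):
        self.toks = tokenize(src)
        self.i = 0

    def peek(self) -> Optional[str]:
        return self.toks[self.i] if self.i < len(self.toks) else None

    def take(self, expected: Optional[str] = None) -> str:
        tok = self.peek()
        if tok is None or (expected is not None and tok != expected):
            raise SyntaxError(f"expected {expected or 'a token'!r}, got {tok!r}")
        self.i += 1
        return tok

    def expression(self) -> Expr:
        tok = self.peek()
        if tok is not None and tok.startswith('"'):
            return self.take()[1:-1]  # quoted STRING
        if tok is not None and WORD_RE.fullmatch(tok):
            # Assumption: consecutive bare words form one STRING, so `(self loop)` parses.
            words = []
            while self.peek() is not None and WORD_RE.fullmatch(self.peek()):
                words.append(self.take())
            return " ".join(words)
        # EXPRESSION' is left-recursive; parse it as NODE followed by (operator NODE)*.
        # Assumption: a lone NODE is accepted, as the `[ (circle) ]` example needs.
        chain = Chain(self.node())
        while self.peek() in ("->", "=>", "|"):
            op = self.take()
            chain.rest.append((op, self.node()))
        return chain

    def node(self) -> Node:
        anchor = None
        if self.peek() == "<":
            self.take("<")
            anchor = self.take()
            self.take(">")
            if self.peek() not in ("[", "("):
                return Node("ref", anchor)  # bare ANCHOR, e.g. the loop target <lt1>
        opener = self.take()
        # Assumption: CIRCLE mirrors SQUARE with parentheses (the grammar leaves it undefined).
        closer = {"[": "]", "(": ")"}.get(opener)
        if closer is None:
            raise SyntaxError(f"expected '[' or '(', got {opener!r}")
        body = self.expression()
        self.take(closer)
        return Node("square" if opener == "[" else "circle", anchor, body)


def parse(src: str) -> Node:
    p = Parser(src)
    tree = p.node()
    if p.peek() is not None:
        raise SyntaxError(f"trailing input: {p.peek()!r}")
    return tree
```

For example, `parse("[ <lt1>(self loop) -> <lt1> ]")` yields a square whose body chains an anchored circle to a `ref` node carrying the same label `lt1`. The sketch only builds a tree; it gives no meaning to `->` versus `=>`.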

Semantics

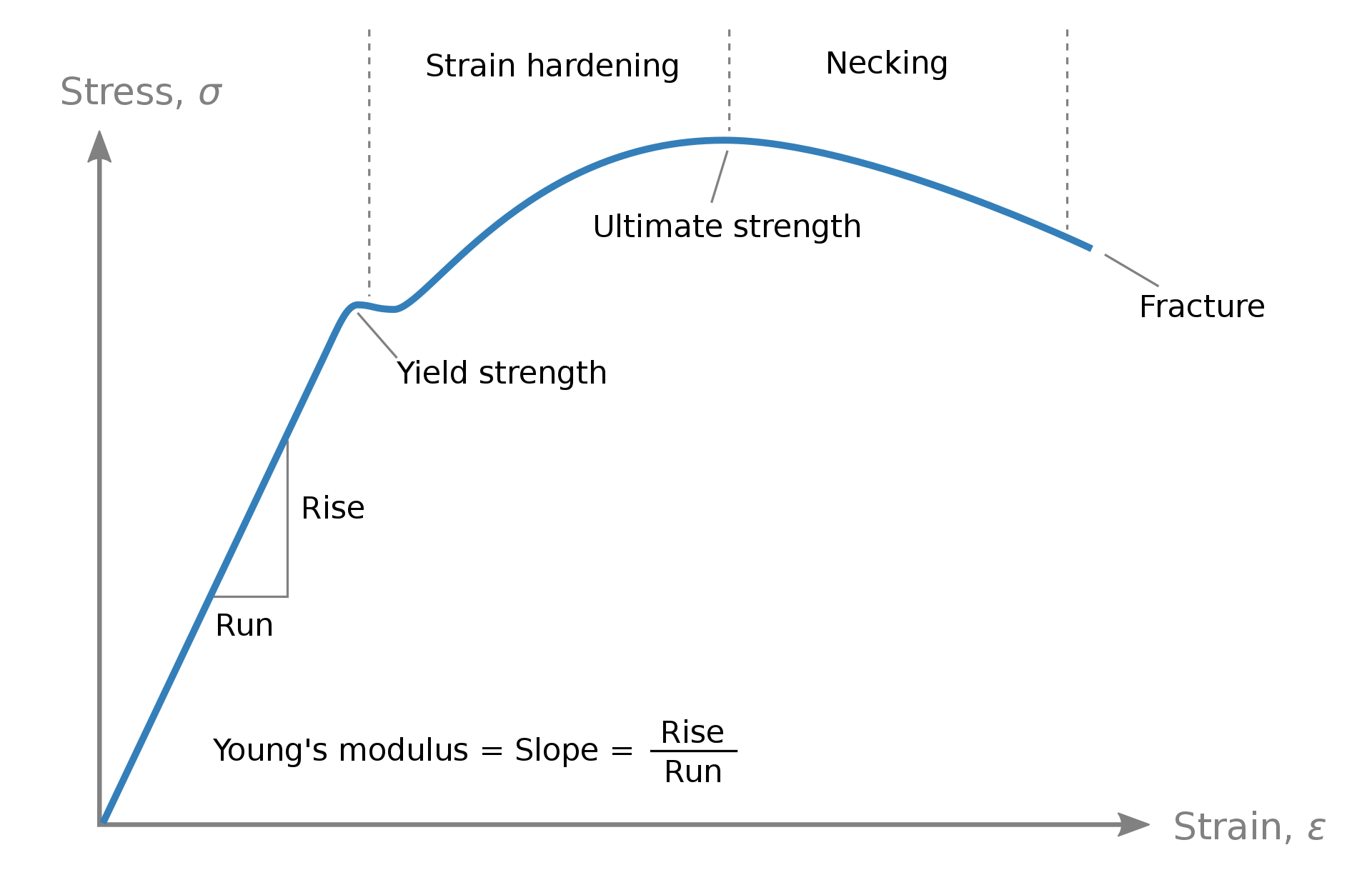

Young's Modulus

Last edited: August 8, 2025

Young’s Modulus is a mechanical property that measures the stiffness of a solid material.

It measures the ratio between mechanical stress \(\sigma\) and the resulting relative strain \(\epsilon\).


And so, very simply:

\begin{equation} E = \frac{\sigma }{\epsilon } \end{equation}

Thinking about this: Silly Putty deforms a lot under a little stress, so it would have a low Young’s Modulus (a small stress \(\sigma\) produces a large strain \(\epsilon\), so \(E = \sigma / \epsilon\) is small); and vice versa. https://aapt.scitation.org/doi/10.1119/1.17116?cookieSet=1
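A worked example of \(E = \sigma / \epsilon\), using the standard definitions \(\sigma = F/A\) (force over cross-sectional area) and \(\epsilon = \Delta L / L_0\) (change in length over original length). The wire dimensions and load are hypothetical numbers chosen for round arithmetic.

```python
def youngs_modulus(force_n: float, area_m2: float,
                   length_m: float, delta_length_m: float) -> float:
    """E = sigma / epsilon, with sigma = F / A (Pa) and epsilon = dL / L0 (unitless)."""
    stress = force_n / area_m2           # sigma, in pascals
    strain = delta_length_m / length_m   # epsilon, dimensionless
    return stress / strain               # E, in pascals


# Hypothetical wire: 1000 N on a 1 mm^2 cross-section stretches a 2 m length by 1 cm.
E = youngs_modulus(1000.0, 1e-6, 2.0, 0.01)
print(f"E = {E / 1e9:.0f} GPa")  # E = 200 GPa
```

Here \(\sigma = 10^9\) Pa and \(\epsilon = 0.005\), giving \(E = 2 \times 10^{11}\) Pa, roughly the stiffness of steel. Because strain is dimensionless, \(E\) has the same units as stress.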

Yuan 2021

Last edited: August 8, 2025

DOI: 10.3389/fcomp.2020.624488

One-Liner

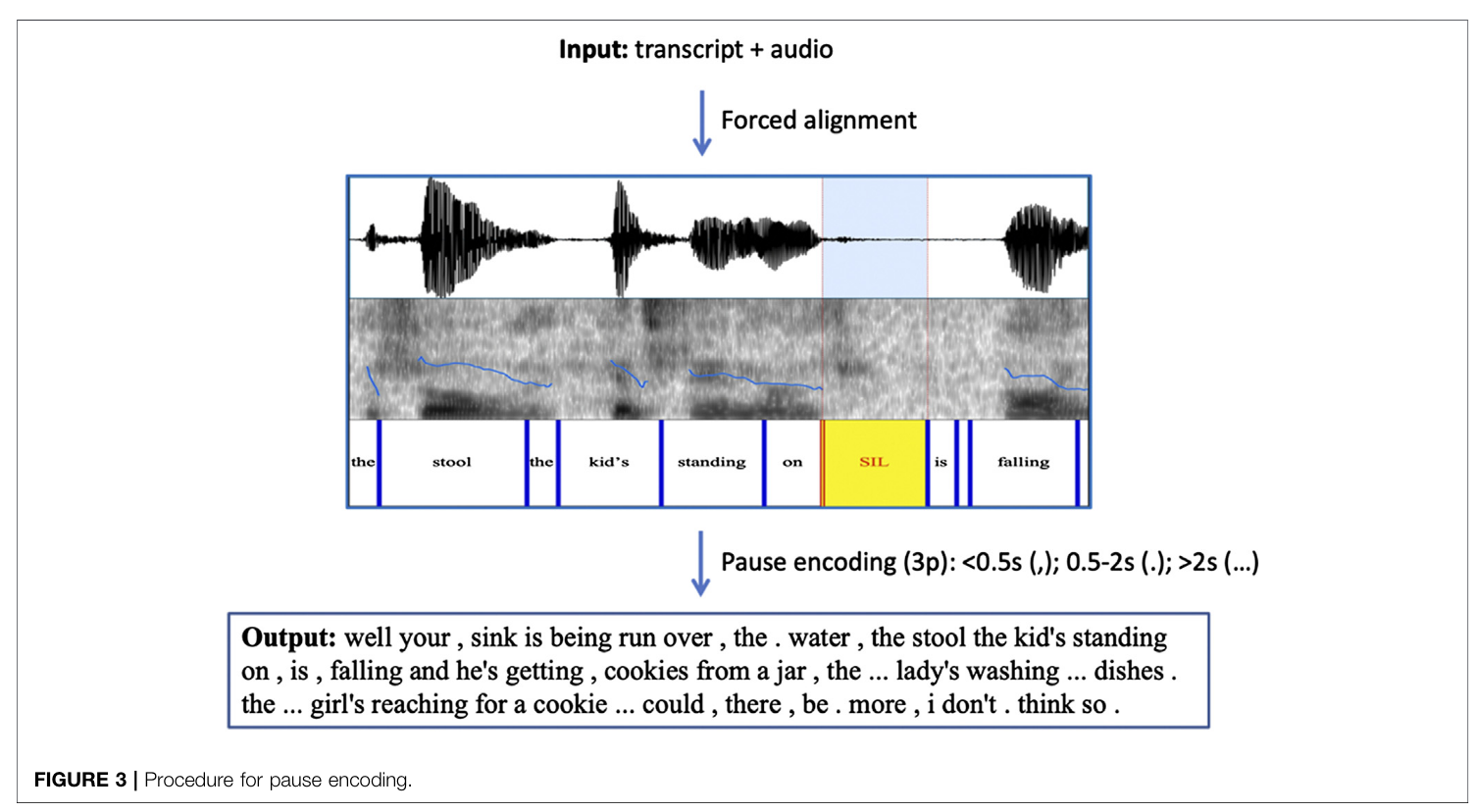

Used ERNIE trained on transcripts for classification; including pause encoding improved results.

Novelty

- Instead of looking only at the actual speech content, look specifically at pauses, treated as a feature-engineering task

- \(89.6\%\) accuracy on the ADReSS Challenge dataset

Notable Methods

Applied forced alignment (FA) and encoded pauses using standard .cha (CHAT) semantics: short, medium, and long pauses. Shoved all of this into ERNIE.
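A sketch of the pause-encoding step, assuming the forced aligner yields `(word, start_s, end_s)` triples in time order. Gaps between consecutive words are mapped to CHAT-style markers `(.)`, `(..)` and `(...)` for short, medium and long pauses and inserted into the transcript. The threshold values and the function names are hypothetical, not taken from the paper.

```python
from typing import List, Optional, Tuple

# Hypothetical thresholds in seconds (not from the paper).
SHORT_MIN, MEDIUM_MIN, LONG_MIN = 0.05, 0.5, 2.0


def pause_token(gap: float) -> str:
    """Map an inter-word gap (seconds) to a CHAT-style pause marker, or '' for no pause."""
    if gap < SHORT_MIN:
        return ""
    if gap < MEDIUM_MIN:
        return "(.)"    # short pause
    if gap < LONG_MIN:
        return "(..)"   # medium pause
    return "(...)"      # long pause


def encode_pauses(aligned: List[Tuple[str, float, float]]) -> str:
    """aligned: (word, start_s, end_s) triples from a forced aligner, in time order."""
    out: List[str] = []
    prev_end: Optional[float] = None
    for word, start, end in aligned:
        if prev_end is not None:
            marker = pause_token(start - prev_end)
            if marker:
                out.append(marker)
        out.append(word)
        prev_end = end
    return " ".join(out)


aligned = [("the", 0.0, 0.2), ("cookie", 0.2, 0.6), ("jar", 0.9, 1.3),
           ("is", 2.3, 2.5), ("falling", 5.0, 5.4)]
print(encode_pauses(aligned))  # the cookie (.) jar (..) is (...) falling
```

The resulting string, with pause markers inline, is what a transcript-based classifier such as ERNIE would then see as input.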

Performance was assessed with LOO (leave-one-out) cross-validation.
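A generic sketch of leave-one-out evaluation: each sample is held out once, the model is trained on the rest, and accuracy is averaged over all held-out predictions. The `fit`/`predict` callables and the majority-class stand-in are hypothetical placeholders; they are not the paper's ERNIE model.

```python
from collections import Counter
from typing import Callable, List, Sequence, TypeVar

X = TypeVar("X")


def loo_accuracy(xs: Sequence[X], ys: Sequence[int],
                 fit: Callable[[List[X], List[int]], object],
                 predict: Callable[[object, X], int]) -> float:
    """Train on all samples but one, test on the held-out one; repeat for every sample."""
    correct = 0
    for k in range(len(xs)):
        train_x = [x for j, x in enumerate(xs) if j != k]
        train_y = [y for j, y in enumerate(ys) if j != k]
        model = fit(train_x, train_y)
        correct += predict(model, xs[k]) == ys[k]
    return correct / len(xs)


# Hypothetical stand-in classifier: always predicts the training set's majority label.
def fit_majority(_xs, ys):
    return Counter(ys).most_common(1)[0][0]


def predict_majority(model, _x):
    return model


print(loo_accuracy(["t1", "t2", "t3", "t4"], [0, 0, 0, 1], fit_majority, predict_majority))  # 0.75
```

LOO trains one model per sample, which is affordable on a small dataset but expensive for a large fine-tuned model.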